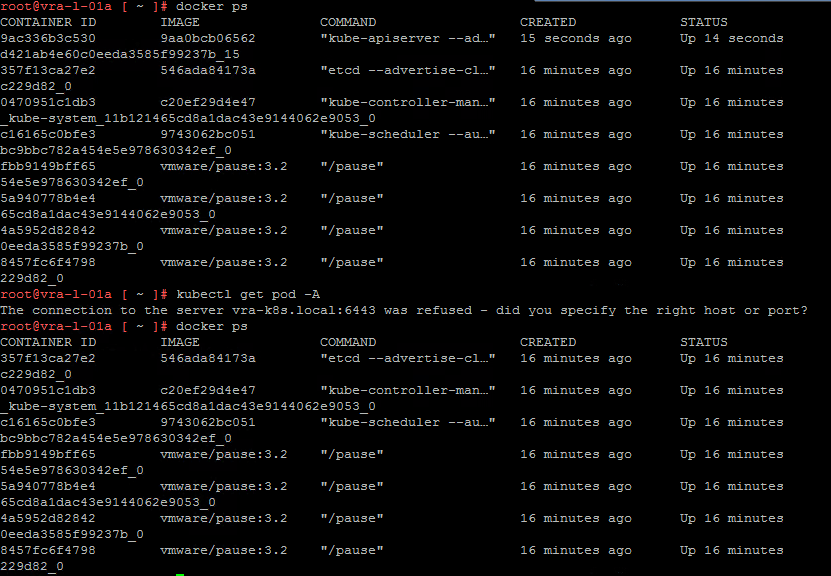

I recently had to bring up an old lab environment that was running an older version of vRA (8.8). At appliance boot, the Kubernetes API server containers restarted continuously and kubectl returned an error. This post covers the troubleshooting steps I took and how I resolved it. Ultimately, the cause was an expired certificate.

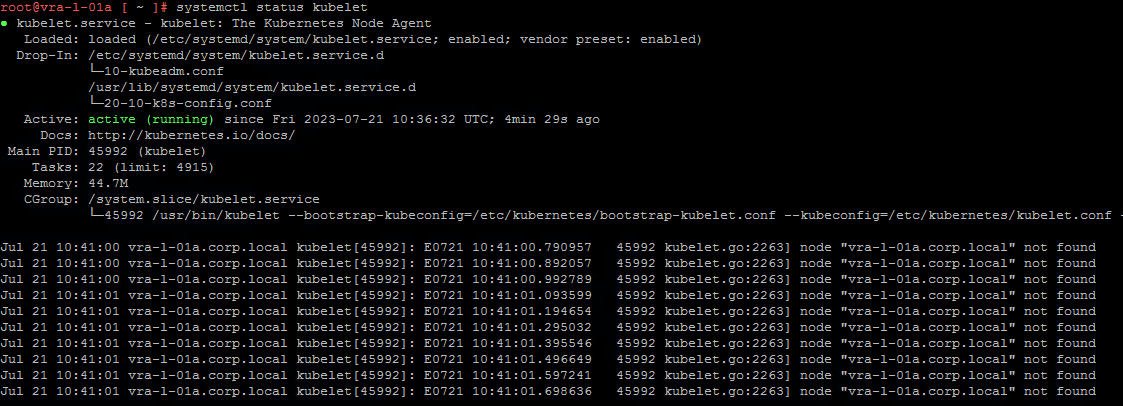

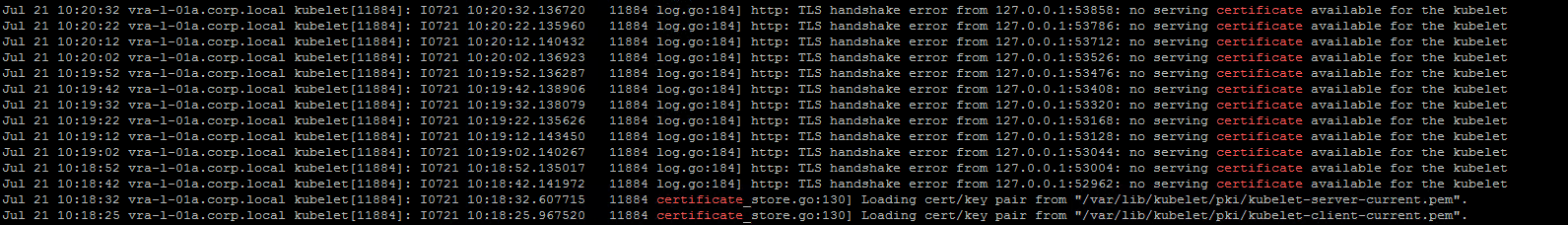

I checked the service status for both kubelet and docker; both were active and running. Next I checked the kubelet logs with `journalctl -u kubelet -r` (the `-r` flag shows the newest messages first).

There were a lot of repetitive log entries stating that the DNS name of our node was not found, but in amongst the noise there were also TLS and certificate-related messages, including the path to the PEM file that the kubelet was trying to use.

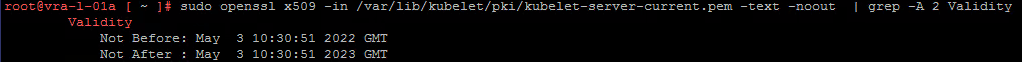
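
Because the kubelet log was mostly DNS noise, a small filter can help surface the certificate messages more quickly. This is only a minimal sketch: the function name `cert_lines` and the search pattern are illustrative assumptions, and the pattern may need widening for other failure modes.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of vRA or the KB): read log lines on stdin
# and keep only those that mention TLS, x509, certificates or a .pem path.
# The pattern is an assumption; adjust it to suit your own logs.
cert_lines() {
  grep -iE 'tls|x509|certificate|\.pem'
}
```

It could be used as `journalctl -u kubelet -r --no-pager | cert_lines | head -n 20` to show the 20 most recent matching lines.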

I then used an OpenSSL command to check the validity of the certificate: `sudo openssl x509 -in /var/lib/kubelet/pki/kubelet-server-current.pem -text -noout | grep -A 2 Validity`. This showed that the certificate had expired.

After some digging I came across the following VMware KB article. Although the symptoms were not the same, it did mention the certificate expiring after a year, which matched what I was seeing: https://kb.vmware.com/s/article/82378

I am using a single vRA appliance, so I followed the relevant steps in the KB. I ran into some issues along the way, which is the purpose of this post. The adjustments I made are below, along with the exact commands I ran.
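
As an alternative to reading the Validity dates by eye, OpenSSL can do the comparison itself with `-checkend`. The sketch below wraps that in a function. The name `cert_status` and the output strings are assumptions made for illustration, not anything vRA ships.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report whether a PEM certificate is still valid.
# Uses `openssl x509 -checkend N`, which exits 0 if the certificate will
# still be valid N seconds from now and non-zero otherwise.
# $1 = path to the PEM file, $2 = look-ahead in seconds (default 0 = now).
cert_status() {
  local pem="$1" within="${2:-0}"
  if [ ! -r "$pem" ]; then
    echo "unreadable"
    return 2
  fi
  if openssl x509 -in "$pem" -noout -checkend "$within" >/dev/null 2>&1; then
    echo "valid"
  else
    echo "expired-or-expiring"
  fi
}
```

Run as root, `cert_status /var/lib/kubelet/pki/kubelet-server-current.pem` checks the certificate as of now. Passing `2592000` as a second argument asks whether it will still be valid in 30 days, which could be handy for a lab that only gets powered on occasionally.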

- Take a snapshot of the vRA VM.

- Locate an etcd backup at `/data/etcd-backup/` and copy the selected backup to `/root`.
  - I ran `ls -lh /data/etcd-backup/`, which showed me the available backups in date order (oldest to newest). I picked the most recent backup (not the .part file).
  - I copied this to root with `cp /data/etcd-backup/backup-1679068501.db /root`.
  - I checked the backup was in root with `ls /root`.

- Reset Kubernetes by running `vracli cluster leave`.
  - This takes a little while to run.

- Restore the etcd backup in `/root` by using the `/opt/scripts/recover_etcd.sh` command. Example: `/opt/scripts/recover_etcd.sh --confirm /root/backup-123456789.db`.
  - I ran `/opt/scripts/recover_etcd.sh --confirm /root/backup-1679068501.db`, the same as the example from VMware, updated with my backup file name.

- Extract VA config from etcd with: `kubectl get vaconfig -o yaml --export > /root/vaconfig.yaml`.
  - This is the first step where I had issues. Initially I got the error `Error: unknown flag: --export`, so I removed the flag from the command.
  - I then got the same error I started with: `The connection to the server vra-k8s.local:6443 was refused - did you specify the right host or port?`
  - I ran through the same initial troubleshooting steps: I checked docker (all good) and kubelet (not started).
  - I started the kubelet service with `systemctl start kubelet` and kept an eye on the status to ensure it stayed running.
  - I checked with `docker ps` and could see the kube-apiserver container was running, so I then ran `kubectl get vaconfig -o yaml > /root/vaconfig.yaml`. This time it was successful.
  - I validated that the `/root/vaconfig.yaml` file had contents with `cat /root/vaconfig.yaml`.

- Reset Kubernetes once again using: `vracli cluster leave`.
  - Again, this takes a little while to run.

- Install the VA config by running: `kubectl apply -f /root/vaconfig.yaml --force`.
  - The server seemed to be running after the previous leave step, but check its status at this point and wait for the kube-apiserver container to exist and be running.

- Run `vracli license` to confirm that VA config is installed properly.

- Run: `/opt/scripts/deploy.sh`.
  - This takes a while to run, but you should see that prelude has been successfully deployed.

- I had issues accessing the vRA UI, so I rebooted and it resolved itself.
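
Two of the manual steps above, picking the newest completed backup and waiting for the API server before running kubectl, lend themselves to small helpers. The following is a sketch under assumptions rather than anything from the KB: the function names `latest_backup` and `wait_for` are hypothetical, and the glob assumes backups are named `backup-<timestamp>.db` like the one I copied, so that .part files never match.

```shell
#!/usr/bin/env bash
# Hypothetical helpers; names and defaults are assumptions for illustration.

# Print the most recently modified completed backup in directory $1.
# Only files matching backup-*.db are considered, which skips .part files.
# Prints nothing and returns non-zero if no backup is found.
latest_backup() {
  local dir="$1" newest="" f
  for f in "$dir"/backup-*.db; do
    [ -e "$f" ] || continue              # the glob matched nothing
    if [ -z "$newest" ] || [ "$f" -nt "$newest" ]; then
      newest="$f"
    fi
  done
  [ -n "$newest" ] && printf '%s\n' "$newest"
}

# Retry a command until it succeeds or the attempts run out.
# Usage: wait_for <attempts> <delay-seconds> <command> [args...]
wait_for() {
  local attempts="$1" delay="$2" i=1
  shift 2
  while [ "$i" -le "$attempts" ]; do
    "$@" >/dev/null 2>&1 && return 0
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  return 1
}
```

For example, `cp "$(latest_backup /data/etcd-backup)" /root` would copy the newest backup, and `wait_for 30 10 kubectl get nodes && kubectl apply -f /root/vaconfig.yaml --force` would hold off the apply until the API server answers, giving up after roughly five minutes. The attempt count and delay are arbitrary starting points.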