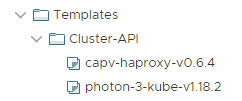

Download the PhotonOS Kubernetes and HAProxy images from http://storage.googleapis.com/capv-images

HAProxy version used in the steps below: http://storage.googleapis.com/capv-images/extra/haproxy/release/v0.6.4/capv-haproxy-v0.6.4.ova

PhotonOS Kubernetes version used in the steps below: http://storage.googleapis.com/capv-images/release/v1.18.2/photon-3-kube-v1.18.2.ova

Deploy both OVA files to vSphere, take a snapshot of each, and then convert them to templates.

Create a new CentOS or Ubuntu VM to act as a temporary bootstrap server - steps below will be focused on CentOS. Alternatively, install the components below on your current device.

This machine will also be used in later stages of your cluster lifecycle management, because clusterctl is installed on it.

Install Docker

curl -fsSL https://get.docker.com -o get-docker.sh

sudo sh get-docker.sh

MAINUSER=$(logname)

sudo usermod -aG docker $MAINUSER

Install KIND

KINDVERSION=$(curl -s https://github.com/kubernetes-sigs/kind/releases/latest/download 2>&1 | grep -Po [0-9]+\.[0-9]+\.[0-9]+)

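# dpkg is not available on CentOS, so the architecture is derived from uname -m instead

sudo curl -L "https://github.com/kubernetes-sigs/kind/releases/download/v$KINDVERSION/kind-$(uname -s | tr '[:upper:]' '[:lower:]')-$(uname -m | sed 's/x86_64/amd64/;s/aarch64/arm64/')" -o /usr/local/bin/kind

sudo chmod +x /usr/local/bin/kind

Install kubectl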

curl -LO "https://storage.googleapis.com/kubernetes-release/release/$(curl -s https://storage.googleapis.com/kubernetes-release/release/stable.txt)/bin/linux/amd64/kubectl"

chmod +x ./kubectl

sudo mv ./kubectl /usr/local/bin/kubectl

Install clusterctl

CLUSTERCTLVERSION=$(curl -s https://github.com/kubernetes-sigs/cluster-api/releases/latest/download 2>&1 | grep -Po [0-9]+\.[0-9]+\.[0-9]+)

# dpkg is not available on CentOS, so the architecture is derived from uname -m instead

sudo curl -L "https://github.com/kubernetes-sigs/cluster-api/releases/download/v$CLUSTERCTLVERSION/clusterctl-$(uname -s | tr '[:upper:]' '[:lower:]')-$(uname -m | sed 's/x86_64/amd64/;s/aarch64/arm64/')" -o /usr/local/bin/clusterctl

sudo chmod +x /usr/local/bin/clusterctl

Create KIND cluster
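
Before creating the cluster, it may be worth confirming that all four tools installed above are actually on your PATH. The helper below is a minimal sketch; `missing_tools` is a hypothetical name, not part of any of these tools.

```shell
# Print the name of every listed command that is not on PATH.
# Returns non-zero if at least one command is missing.
missing_tools() {
  missing=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "$tool"
      missing=1
    fi
  done
  return "$missing"
}

# Usage:
#   missing_tools docker kind kubectl clusterctl || echo "install the tools listed above first"
```

If Docker was only just installed, you may also need to log out and back in before the new group membership takes effect.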

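# Name the cluster "bootstrap" so that the later "kind delete cluster --name bootstrap" step matches it

kind create cluster --name bootstrap

Create clusterctl.yaml to store configuration values. Replace the values where required, taking particular note of values between < and >.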

mkdir -p ~/.cluster-api

tee ~/.cluster-api/clusterctl.yaml >/dev/null <<EOF

## -- Controller settings -- ##

VSPHERE_USERNAME: "<username>" # The username used to access the remote vSphere endpoint

VSPHERE_PASSWORD: "vmware1" # The password used to access the remote vSphere endpoint

## -- Required workload cluster default settings -- ##

VSPHERE_SERVER: "vcenter1.domain.com" # The vCenter server IP or FQDN

VSPHERE_DATACENTER: "Datacenter" # The vSphere datacenter to deploy the management cluster on

VSPHERE_DATASTORE: "Datastore" # The vSphere datastore to deploy the management cluster on

VSPHERE_NETWORK: "VM Network" # The VM network to deploy the management cluster on

VSPHERE_RESOURCE_POOL: "<ClusterName>/Resources/<ResourcePoolName>" # The vSphere resource pool for your VMs

VSPHERE_FOLDER: "vm/<FolderName>/<ChildFolderName>" # The VM folder for your VMs. Set to "" to use the root vSphere folder

VSPHERE_TEMPLATE: "photon-3-kube-v1.18.2" # The VM template to use for your management cluster.

VSPHERE_HAPROXY_TEMPLATE: "capv-haproxy-v0.6.4" # The VM template to use for the HAProxy load balancer

VSPHERE_SSH_AUTHORIZED_KEY: "<ssh key>" # The public ssh authorized key on all machines in this cluster. Set to "" if you don't want to enable SSH, or are using another solution.

EOF

Initialise and create the bootstrap management cluster. Change the number of control-plane and worker nodes, Kubernetes version, etc. as appropriate.
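
Since clusterctl reads its values from clusterctl.yaml, a leftover placeholder can cause a confusing failure later on. The sketch below lists any lines that still contain a `<...>` value; `find_placeholders` is a hypothetical helper name, and the check is a plain text match rather than a YAML-aware one.

```shell
# List every line of a file that still contains a <...> placeholder.
# Returns 0 when the file is clean, 1 when placeholders remain,
# and 2 when the file cannot be read.
find_placeholders() {
  if [ ! -r "$1" ]; then
    echo "cannot read $1" >&2
    return 2
  fi
  if grep -n '<[^<>]*>' "$1"; then
    return 1
  fi
  return 0
}

# Usage:
#   find_placeholders ~/.cluster-api/clusterctl.yaml || echo "replace the values listed above"
```

Any line it prints, prefixed with its line number, still needs a real value before you continue.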

clusterctl init --infrastructure vsphere

# if this fails use clusterctl init --infrastructure=vsphere:v0.6.6

mkdir -p ~/local-control-plane

clusterctl config cluster local-control-plane --infrastructure vsphere --kubernetes-version v1.18.2 --control-plane-machine-count 1 --worker-machine-count 1 > ~/local-control-plane/cluster.yaml

Review the cluster config file and make any required changes. Some settings worth reviewing are CPU, memory, storage and the pod CIDR range.

You can use sed to find and replace values within the file. The examples below change the storage and memory for all virtual machines in this deployment, as well as the pod CIDR:

- Pod CIDR from 192.168.0.0/16 to 192.168.100.0/24
- Memory from 8192 MiB to 4096 MiB
- Storage from 25 GiB to 20 GiB

sed -i "s/192.168.0.0\/16/192.168.100.0\/24/g" ~/local-control-plane/cluster.yaml

sed -i "s/memoryMiB: 8192/memoryMiB: 4096/g" ~/local-control-plane/cluster.yaml

sed -i "s/diskGiB: 25/diskGiB: 20/g" ~/local-control-plane/cluster.yaml

Deploy the cluster components. Monitor the deployment of virtual machines within vSphere.
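
Because sed makes no changes when a pattern does not match, and does so silently, it can help to look at the resulting values before applying the file. The sketch below prints the memory, disk and CIDR lines with their line numbers. `show_sizing` is a hypothetical helper name, and the CIDR pattern simply matches anything shaped like an IPv4 range, which is an assumption about how the value appears in your generated file.

```shell
# Print the memory, disk and IPv4 CIDR lines from a cluster manifest,
# each prefixed with its line number.
show_sizing() {
  grep -nE 'memoryMiB:|diskGiB:|[0-9]+\.[0-9]+\.[0-9]+\.[0-9]+/[0-9]+' "$1"
}

# Usage:
#   show_sizing ~/local-control-plane/cluster.yaml
```

If the old values still appear in the output, the corresponding sed pattern probably did not match the generated file exactly.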

kubectl apply -f ~/local-control-plane/cluster.yaml

Use kubectl to see cluster progress. The control plane won’t be Ready until we install a CNI in a later step.

kubectl get cluster --all-namespaces

kubectl get kubeadmcontrolplane --all-namespaces

Retrieve the kubeconfig file for the local-control-plane cluster, and set it as the KUBECONFIG environment variable.
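
The kubeconfig secret may not exist yet if the virtual machines are still being provisioned, in which case the retrieval command would write an empty file. One option is to poll until the secret appears. The following is a minimal sketch of a generic retry loop; `retry` is a hypothetical helper name, and the attempt count and delay in the usage line are arbitrary.

```shell
# Run a command repeatedly until it succeeds.
# $1 = maximum attempts, $2 = seconds to wait between attempts,
# remaining arguments = the command to run.
retry() {
  retry_max=$1
  retry_delay=$2
  shift 2
  retry_n=1
  while ! "$@"; do
    if [ "$retry_n" -ge "$retry_max" ]; then
      echo "gave up after $retry_n attempts: $*" >&2
      return 1
    fi
    retry_n=$((retry_n + 1))
    sleep "$retry_delay"
  done
}

# Usage (secret name taken from the retrieval command in this step):
#   retry 60 10 kubectl get secret local-control-plane-kubeconfig
```

Once the loop returns successfully, the commands below should find the secret.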

kubectl get secret local-control-plane-kubeconfig -o=jsonpath='{.data.value}' | { base64 -d 2>/dev/null || base64 -D; } > ~/local-control-plane/kubeconfig

export KUBECONFIG="$HOME/local-control-plane/kubeconfig"

Deploy Calico CNI to local-control-plane.

Because we have set KUBECONFIG in the above step our kubectl commands will run against the local-control-plane cluster. If you want to be certain, add --kubeconfig="$HOME/local-control-plane/kubeconfig" to the commands.
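
Every remaining step depends on that kubeconfig file, so a quick sanity check before using it can save some confusion. The sketch below is only a heuristic: it assumes the file contains a top-level `kind: Config` line and a `server:` entry, as kubeconfig files normally do, and `looks_like_kubeconfig` is a hypothetical helper name.

```shell
# Succeed only if the file is non-empty and has the basic shape of a kubeconfig.
looks_like_kubeconfig() {
  [ -s "$1" ] && grep -q '^kind: Config' "$1" && grep -q 'server:' "$1"
}

# Usage:
#   looks_like_kubeconfig "$HOME/local-control-plane/kubeconfig" || echo "kubeconfig is missing or empty"
```

An empty file usually means the secret was not available yet when the retrieval command ran.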

kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml

#Example with kubeconfig specified

#kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml --kubeconfig="$HOME/local-control-plane/kubeconfig"

Now we will initialise the local-control-plane cluster as our Cluster API management cluster.

clusterctl init --infrastructure vsphere

Switch back to the kubeconfig for the bootstrap cluster, migrate the management components from it to the local-control-plane cluster, and finally delete the bootstrap cluster.

unset KUBECONFIG

clusterctl move --to-kubeconfig="$HOME/local-control-plane/kubeconfig"

kind delete cluster --name bootstrap

Set the local-control-plane kubeconfig as our default.

cp ~/local-control-plane/kubeconfig ~/.kube/config

You will now be able to perform cluster management operations. Some examples are below.

#Retrieve related vSphere VMs

kubectl get vspheremachine

#Retrieve related machines

kubectl get machine

#Retrieve additional machine details

kubectl describe machine <machine-name>

#Retrieve additional cluster information

kubectl describe cluster local-control-plane

#Get machinedeployment (replicaset equivalent for machines)

kubectl get machinedeployment

#Scale worker nodes machinedeployment

kubectl scale machinedeployment local-control-plane-md-0 --replicas=3

#Get various machine resources at once

kubectl get cluster,machine,machinesets,machinedeployment,vspheremachine

#Get most/all Cluster API resources

kubectl get clusters,machinedeployments,machinehealthchecks,machines,machinesets,providers,kubeadmcontrolplanes,machinepools,haproxyloadbalancers,vsphereclusters,vspheremachines,vspheremachinetemplates,vspherevms

The next article will cover deploying a workload cluster that can be consumed by your development teams.

Next Article in this Series: Cluster API Workload Cluster (vSphere)

Reference: The Cluster API project